Using AI To Analyze And Transform Repetitive Scatological Data Into A Podcast

Data Collection and Preprocessing: Laying the Foundation for AI Analysis

The first step in applying AI to scatological data is careful collection and preprocessing. Reliable input is crucial: inconsistent formatting, missing values, and noisy data can significantly reduce the accuracy of the results.

Challenges: Collecting scatological data requires standardized methods and strict hygiene and safety protocols. The resulting records are often incomplete, inconsistently formatted, or erroneous.

Standardization and Formatting: Consistent data formatting is essential. This involves establishing clear guidelines for data entry, ensuring uniformity across different sources, and converting data into a suitable format for AI algorithms (e.g., CSV, JSON).
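As a minimal sketch of what such standardization could look like, the snippet below maps records from two differently formatted sources onto one schema and exports them as JSON and CSV. The field names (`sample_id`, `collected_on`, `mass_g`), the alias table, and the sample values are assumptions made for illustration, not part of any real dataset. It uses only the Python standard library; Pandas offers equivalent renaming and type-coercion tools.

```python
import csv
import io
import json
from datetime import datetime

# Hypothetical mapping from source-specific column names to one shared schema.
FIELD_ALIASES = {
    "id": "sample_id",
    "sample": "sample_id",
    "date": "collected_on",
    "collected": "collected_on",
    "weight_g": "mass_g",
    "mass": "mass_g",
}
# Date formats we assume the sources might use.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Return an ISO 8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def standardize(record):
    """Rename fields, trim whitespace, and coerce types for one raw record."""
    clean = {}
    for key, value in record.items():
        key = key.strip().lower()
        name = FIELD_ALIASES.get(key, key)
        clean[name] = value.strip() if isinstance(value, str) else value
    clean["collected_on"] = parse_date(str(clean.get("collected_on", "")))
    mass = clean.get("mass_g")
    clean["mass_g"] = float(mass) if mass not in (None, "") else None
    return clean


def to_csv(records, fields=("sample_id", "collected_on", "mass_g")):
    """Serialize standardized records to CSV text with a fixed column order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Illustrative raw records from two sources with different conventions.
raw = [
    {"ID": " A1 ", "Date": "2025-04-01", "weight_g": "120.5"},
    {"sample": "B2", "collected": "02/04/2025", "mass": ""},
]
records = [standardize(r) for r in raw]
print(json.dumps(records, indent=2))
print(to_csv(records))
```

Missing measurements are kept as explicit `None` values here rather than guessed, so that a later imputation step can decide how to handle them.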

Data Cleaning Techniques: Several techniques are employed to clean and prepare the data for AI processing:

- Noise reduction: Removing irrelevant or erroneous data points.

- Outlier detection: Identifying and handling unusual data points that may skew results.

- Missing data imputation: Filling in missing values using statistical methods or machine learning techniques.
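The first two techniques above can be sketched in a few lines. This example assumes a single numeric measurement per sample (a hypothetical mass in grams with made-up values), treats non-positive or missing entries as noise, and flags outliers with Tukey's interquartile-range fences. The 1.5 multiplier is a common convention, not a requirement.

```python
import statistics


def drop_invalid(values):
    """Noise reduction: discard entries that are missing or not positive."""
    return [v for v in values if v is not None and v > 0]


def iqr_outliers(values, k=1.5):
    """Split values into (kept, flagged) using the fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    kept = [v for v in values if low <= v <= high]
    flagged = [v for v in values if v < low or v > high]
    return kept, flagged


# Illustrative measurements: one negative reading, one gap, one implausible spike.
masses = [110.0, 95.5, -1.0, 130.2, None, 102.3, 980.0, 118.7, 99.9]
valid = drop_invalid(masses)
kept, flagged = iqr_outliers(valid)
print("kept:", kept)
print("flagged for review:", flagged)
```

Flagged values are returned rather than silently deleted, since an unusual reading may be a data-entry error or a genuinely interesting observation.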

Tools and Techniques:

- Methods for collecting reliable scatological data: Employing standardized sampling methods, utilizing appropriate collection tools, and ensuring proper documentation are vital.

- Software/Libraries: Python libraries like Pandas and Scikit-learn provide powerful tools for data cleaning and preprocessing. R also offers similar capabilities.

- Strategies for handling missing or incomplete data: Methods such as mean/median imputation, k-Nearest Neighbors imputation, and multiple imputation can be used to address missing data.
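To make two of these strategies concrete, here is a small standard-library sketch of median imputation and a simplified k-Nearest Neighbors imputation. The columns (fiber in grams, water in liters, sample mass in grams) and all values are hypothetical. In practice, features would normally be scaled before computing distances, and scikit-learn's `SimpleImputer` and `KNNImputer` cover the same ground with more options.

```python
import math
import statistics


def median_impute(column):
    """Replace None with the median of the observed values in one column."""
    fill = statistics.median(v for v in column if v is not None)
    return [fill if v is None else v for v in column]


def knn_impute(rows, k=2):
    """Fill each None with the mean of that column over the k nearest donor rows.

    Distance is the root mean squared difference over the columns that
    both rows have observed.
    """
    def distance(a, b):
        shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
        if not shared:
            return math.inf
        return math.sqrt(sum((x - y) ** 2 for x, y in shared) / len(shared))

    filled = [list(row) for row in rows]
    for i, row in enumerate(rows):
        for col, value in enumerate(row):
            if value is not None:
                continue
            # Donors are other rows that actually have a value in this column.
            donors = [r for j, r in enumerate(rows) if j != i and r[col] is not None]
            donors.sort(key=lambda r: distance(row, r))
            nearest = donors[:k]
            if nearest:
                filled[i][col] = statistics.fmean(r[col] for r in nearest)
    return filled


# Hypothetical columns: (fiber_g, water_l, mass_g)
rows = [
    (30.0, 2.0, 150.0),
    (28.0, 2.1, 145.0),
    (10.0, 1.0, 80.0),
    (12.0, 1.1, None),
    (29.0, None, 148.0),
    (11.0, 0.9, 85.0),
]
filled = knn_impute(rows, k=2)
for row in filled:
    print(row)
print(median_impute([110.0, None, 95.0, 130.0]))
```

Median imputation ignores the other columns entirely, while the k-NN variant borrows values from samples that look similar, which tends to preserve relationships between variables at the cost of more computation.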

AI Techniques for Scatological Data Analysis: Unveiling Hidden Insights

Once the data is clean and preprocessed, various AI techniques can be applied to uncover hidden patterns and trends. The choice of technique depends on the specific research questions and the nature of the data.

Suitable AI Techniques:

- Machine Learning: Algorithms like clustering (e.g., K-means, hierarchical clustering) can group similar data points, revealing underlying patterns in scatological characteristics.

- Natural Language Processing (NLP): If the data includes textual descriptions, NLP can be used to analyze sentiment, identify key themes, and extract relevant information.

- Regression Analysis: This can be used to model relationships between different variables in the data, potentially uncovering correlations that could inform podcast narratives.

- Classification: This technique can be used to categorize different types of scatological data, allowing for a more structured analysis.
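To illustrate the clustering idea, the following is a bare-bones K-means (Lloyd's algorithm) written with the standard library only. The two features per sample (daily frequency and a consistency score) and the data points are assumptions for illustration. The deterministic farthest-point seeding is a simplification chosen to keep the example reproducible; scikit-learn's `KMeans` is the more robust choice for real work.

```python
import math


def init_centroids(points, k):
    """Farthest-point seeding: start at the first point, then spread seeds out."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    return centroids


def kmeans(points, k, iterations=50):
    """Return (centroids, labels) after alternating assignment and update steps."""
    centroids = init_centroids(points, k)
    labels = None
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # No point changed cluster, so the algorithm has converged.
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, labels


# Hypothetical features per sample: (daily_frequency, consistency_score)
samples = [(1.0, 3.0), (1.2, 3.5), (0.9, 4.0), (3.0, 6.0), (3.2, 6.5), (2.8, 6.0)]
centroids, labels = kmeans(samples, k=2)
print("labels:", labels)
print("centroids:", centroids)
```

The labels only say which samples belong together. Interpreting why a group exists still requires looking at the centroid values and the context in which the samples were collected.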

Algorithm Application:

- Clustering: Identify groups of individuals or samples with similar scatological profiles.

- Regression: Model the relationship between diet and scatological output.

- Classification: Categorize scatological samples based on specific characteristics.
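The regression case can be sketched as a simple least-squares line. The example assumes one predictor (daily fiber intake in grams) and one outcome (sample mass in grams); both the variable names and the numbers are invented for illustration and carry no empirical meaning. On Python 3.10 or later, `statistics.linear_regression` gives the same slope and intercept.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(xs, ys, slope, intercept):
    """Share of the variance in ys explained by the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Illustrative data only: fiber intake (g/day) against sample mass (g).
fiber_g = [10, 15, 20, 25, 30]
mass_g = [82, 101, 118, 142, 157]

slope, intercept = fit_line(fiber_g, mass_g)
fit = r_squared(fiber_g, mass_g, slope, intercept)
print(f"mass_g = {slope:.2f} * fiber_g + {intercept:.2f} (R^2 = {fit:.3f})")
```

A fitted slope like this translates directly into a line a host could read aloud, for example how many grams the outcome changes per extra gram of fiber, provided the episode is clear that the figure describes an association in the sample, not a proven cause.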

Advantages and Limitations: Each technique has its own strengths and weaknesses. For example, clustering algorithms are excellent for identifying groups, but they do not explain why those groups exist. Regression analysis can quantify relationships between variables, but it shows correlation rather than causation, and a linear model fits poorly when the underlying relationship is nonlinear.

Transforming Data into Podcast Content: From Numbers to Narrative

The analyzed data needs to be transformed into an engaging podcast narrative. This requires skillful storytelling and creative sound design.

Narrative Structure: Structure the podcast episode around key data-driven insights. Consider using a chronological approach, thematic organization, or a problem/solution format.

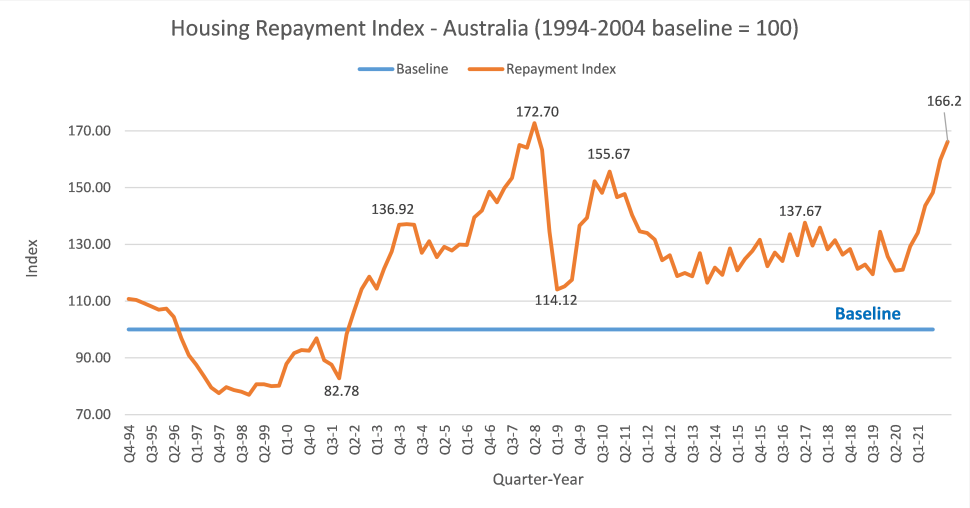

Data Visualization in Audio: Convert data visualizations (charts, graphs) into accessible audio formats. This might involve using descriptive language, sound effects, or even custom-created audio visualizations.
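One possible way to approach this, sketched below, is to pair a spoken description with a simple sonification in which each data point becomes a short tone whose pitch rises with the value. The pitch range, note length, sample rate, and the weekly values are all arbitrary assumptions. The output is a plain mono WAV built with Python's standard `wave` module, which could then be imported into an audio editor.

```python
import io
import math
import struct
import wave


def to_frequencies(values, low_hz=220.0, high_hz=880.0):
    """Map each value linearly onto a pitch range: higher value, higher pitch."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # Avoid division by zero for a flat series.
    return [low_hz + (v - lo) / span * (high_hz - low_hz) for v in values]


def render_wav(frequencies, note_seconds=0.25, rate=8000):
    """Render one sine tone per data point and return mono 16-bit WAV bytes."""
    frames = bytearray()
    samples_per_note = int(note_seconds * rate)
    for freq in frequencies:
        for n in range(samples_per_note):
            sample = 0.5 * math.sin(2 * math.pi * freq * n / rate)
            frames += struct.pack("<h", int(sample * 32767))
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(bytes(frames))
    return buffer.getvalue()


def describe(label, values):
    """Turn a series into a sentence a host could read aloud."""
    if values[-1] > values[0]:
        trend = "rose"
    elif values[-1] < values[0]:
        trend = "fell"
    else:
        trend = "held steady"
    return f"{label} {trend} from {values[0]:g} to {values[-1]:g}, peaking at {max(values):g}."


# Illustrative weekly averages.
weekly = [82, 101, 118, 142, 157]
freqs = to_frequencies(weekly)
audio = render_wav(freqs)
print(describe("Average sample mass in grams", weekly))
print(f"{len(audio)} bytes of WAV audio for {len(freqs)} data points")
```

Playing the tones right after the spoken sentence lets listeners hear the trend they were just told about, which is roughly the audio counterpart of showing a line chart next to its caption.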

Sound Design: Use sound design to enhance the emotional impact and memorability of the podcast. Soundscapes, music, and sound effects can help create a captivating listening experience.

Successful Examples: Study podcasts that build their narratives from data, such as established science or investigative journalism shows, and note how they work figures into the story.

Ethical Considerations and Data Privacy: Responsible AI Implementation

Handling sensitive scatological data requires a strong ethical framework and adherence to data privacy regulations.

Data Anonymization: Employ strong anonymization techniques to protect individual privacy. This could include removing identifying information or using data aggregation methods.
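Both approaches mentioned above can be sketched briefly. The example drops direct identifiers, replaces the participant ID with a keyed hash, and reports only group averages for groups above a minimum size. The field names, the key, the threshold of three, and the records are all hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization, since anyone holding the key can re-link records, so the key must be stored separately from the data.

```python
import hashlib
import hmac
from collections import defaultdict

# Hypothetical field names that directly identify a person.
DIRECT_IDENTIFIERS = {"name", "email"}
# Placeholder key for illustration; a real key belongs outside the dataset and the code.
SECRET_KEY = b"replace-with-a-secret-kept-outside-the-dataset"


def pseudonymize(record, secret_key=SECRET_KEY):
    """Drop direct identifiers and replace participant_id with a keyed hash."""
    digest = hmac.new(secret_key, record["participant_id"].encode(), hashlib.sha256)
    clean = {
        k: v
        for k, v in record.items()
        if k not in DIRECT_IDENTIFIERS and k != "participant_id"
    }
    clean["participant_token"] = digest.hexdigest()[:16]
    return clean


def aggregate(records, group_field, value_field, min_group_size=3):
    """Report group means, suppressing groups too small to hide an individual."""
    groups = defaultdict(list)
    for r in records:
        groups[r[group_field]].append(r[value_field])
    return {
        g: sum(vals) / len(vals)
        for g, vals in groups.items()
        if len(vals) >= min_group_size
    }


# Illustrative records only.
people = [
    {"participant_id": "P-001", "name": "Alex", "email": "alex@example.com", "diet": "high_fiber", "mass_g": 150.0},
    {"participant_id": "P-002", "name": "Sam", "email": "sam@example.com", "diet": "high_fiber", "mass_g": 145.0},
    {"participant_id": "P-003", "name": "Kim", "email": "kim@example.com", "diet": "high_fiber", "mass_g": 155.0},
    {"participant_id": "P-004", "name": "Lee", "email": "lee@example.com", "diet": "low_fiber", "mass_g": 80.0},
]
safe = [pseudonymize(p) for p in people]
summary = aggregate(safe, "diet", "mass_g")
print(safe[0])
print(summary)
```

Only the aggregated summary would be a candidate for discussion on air. The single low-fiber participant is suppressed because a group of one would expose that person's individual value.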

Bias Mitigation: Be aware of potential biases in the data and the AI algorithms used. Address these biases proactively to ensure fair and accurate results.

Data Privacy Regulations: Comply with the data privacy regulations that apply to your jurisdiction and data type, such as the GDPR (General Data Protection Regulation) in the European Union or HIPAA (Health Insurance Portability and Accountability Act) in the United States.

Best Practices:

- Data minimization: Collect only the data necessary for the analysis.

- Access control: Restrict access to the data to authorized personnel.

- Regular audits: Conduct regular audits to ensure compliance with data privacy regulations.

Conclusion: Harnessing the Power of AI for Scatological Podcast Success

AI analysis of scatological data for podcasts offers a unique opportunity to create engaging and insightful content. By carefully collecting, preprocessing, and analyzing the data, and then transforming it into a compelling narrative, podcasters can tap into a previously unexplored source of fascinating stories. Remember the importance of ethical considerations and data privacy throughout the process. Start leveraging the power of AI analysis of scatological data for podcasts today and unlock a whole new world of podcasting possibilities!
