Mining Meaning From Mundane Data: An AI Approach To Podcast Production Using Scatological Documents

Table of Contents

H2: Data Acquisition and Preprocessing: The Foundation of Meaningful Insights

Before we can leverage the power of AI, we need the right data. This section focuses on the crucial first steps: acquiring and preparing our "scatological documents" for analysis.

H3: Identifying and Sourcing Scatological Documents:

Finding suitable data sources requires careful consideration and a sensitivity to ethical implications. Anonymization and responsible data handling are paramount. Potential sources include:

- Historical archives: Letters, diaries, and official records often contain insightful social commentary interwoven with references to sanitation practices or attitudes towards waste.

- Literary works: From ancient texts to modern novels, literature provides a rich tapestry of cultural perspectives on excretion and hygiene, offering valuable contextual data.

- Medical journals (with appropriate de-identification): Historical medical texts may provide data on disease prevalence and sanitation practices.

- Government reports and census data: These sources can reveal insights into public health, sanitation infrastructure, and societal attitudes towards waste management throughout history.

Anonymization techniques are crucial. This might involve removing names, locations, and other identifying details before analysis. The ethical handling of sensitive data is crucial in this process.

H3: Data Cleaning and Preparation:

Raw data is rarely usable directly. Scatological documents, in particular, may require significant cleaning and preprocessing:

- Removing irrelevant information: This may involve filtering out sections unrelated to the chosen research focus (e.g., removing passages unrelated to sanitation or waste).

- Handling missing data: Imputation techniques, such as using the mean or median values, can help address missing entries.

- Text normalization: This includes tasks like converting text to lowercase, removing punctuation, and stemming or lemmatization (reducing words to their root form).

- Using tools like: NLTK (Natural Language Toolkit) or spaCy can significantly streamline this process.

The unique challenges presented by this specific dataset necessitate a careful and methodical approach. The presence of archaic language, inconsistent spellings, and potentially ambiguous terminology demands a robust cleaning strategy.

H2: AI-Powered Analysis: Uncovering Hidden Narratives and Themes

Once our data is clean, we can apply AI techniques to uncover hidden patterns and narratives.

H3: Natural Language Processing (NLP): Deciphering the Language of Waste:

NLP techniques unlock the potential of textual data. Specifically:

- Sentiment analysis: This allows us to gauge the emotional tone surrounding discussions of waste and sanitation. Was the societal response to sanitation primarily fear, disgust, or perhaps acceptance?

- Topic modeling: Techniques like Latent Dirichlet Allocation (LDA) can identify recurring themes and topics within the documents, potentially revealing evolving social attitudes or the influence of specific historical events.

- Named entity recognition (NER): Identify key individuals, places, and organizations mentioned in the context of sanitation and waste management to uncover critical players and historical contexts.

H3: Machine Learning (ML): Predictive Modeling for Podcast Content:

ML can help us predict what our audience might find interesting. For instance:

- Regression models: Might predict the level of audience engagement based on factors like the era discussed or the specific themes explored.

- Classification models: Could categorize podcast episodes by their predicted emotional impact or societal relevance based on data analysis.

- Recommendation systems: Could suggest podcast topics based on successful prior episodes and the identified themes within the “scatological documents” analyzed.

Predictive modeling allows for data-driven decisions in podcast creation, increasing the likelihood of creating engaging and successful content.

H2: From Data to Podcast: Crafting Engaging Content

The final step is transforming the AI-generated insights into captivating podcast episodes.

H3: Storytelling Techniques from Unconventional Sources:

- Creating narratives: The discovered themes and trends can be woven into compelling narratives, providing historical context and unique perspectives on sanitation and waste management.

- Ethical considerations: Always be mindful of the sensitive nature of the data and present information responsibly and ethically. Context is crucial.

- Episode ideas: Analyze topics like the history of sanitation, societal attitudes towards waste across different eras, or the impact of sanitation improvements on public health. We can create episodes exploring these themes with historical accuracy and engaging storytelling.

H3: Audience Engagement and Feedback Loops:

- Collecting feedback: Utilize surveys, comment sections, and social media engagement to gauge audience response.

- Improving the AI: Incorporate audience feedback to refine the AI models, improving the accuracy of future topic predictions and content suggestions.

- Iterative process: Use audience feedback to inform future data analysis and content creation strategies. This data-driven approach ensures that the podcast remains relevant and engaging for the target audience.

3. Conclusion:

This article explored how mining meaning from mundane data, specifically using scatological documents, can revolutionize podcast production through AI. By combining data acquisition, AI-powered analysis, and thoughtful storytelling, podcasters can create unique, engaging, and data-driven content. The key takeaways are the immense potential of unconventional data sources, the power of AI in creative fields, and the importance of responsible data handling and ethical storytelling. Start mining meaning from your own mundane data today and unlock the potential for innovative podcasting! Explore tools like NLTK and spaCy to begin your journey into AI-powered content creation.

Featured Posts

-

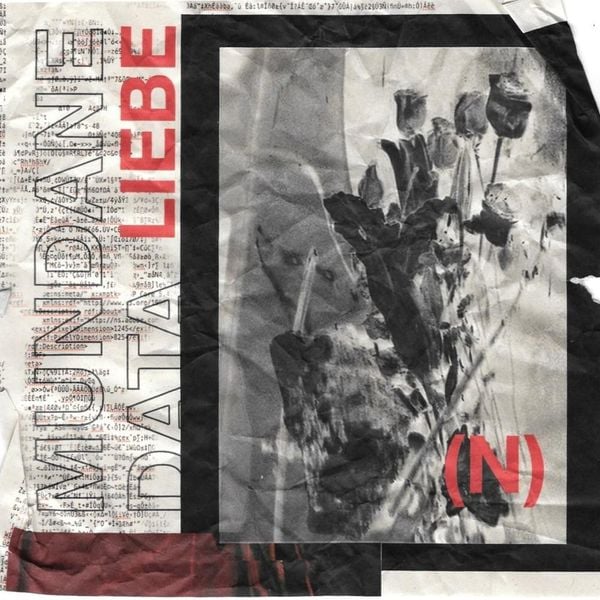

Hegseths Signal Chat Leaked Military Plans With Family

Apr 22, 2025

Hegseths Signal Chat Leaked Military Plans With Family

Apr 22, 2025 -

Mapping The Countrys Emerging Business Hubs

Apr 22, 2025

Mapping The Countrys Emerging Business Hubs

Apr 22, 2025 -

Secret Service Investigation Concludes Cocaine Found At White House

Apr 22, 2025

Secret Service Investigation Concludes Cocaine Found At White House

Apr 22, 2025 -

The Difficulties Of Automating Nike Sneaker Assembly

Apr 22, 2025

The Difficulties Of Automating Nike Sneaker Assembly

Apr 22, 2025 -

Trumps Economic Agenda Who Pays The Price

Apr 22, 2025

Trumps Economic Agenda Who Pays The Price

Apr 22, 2025